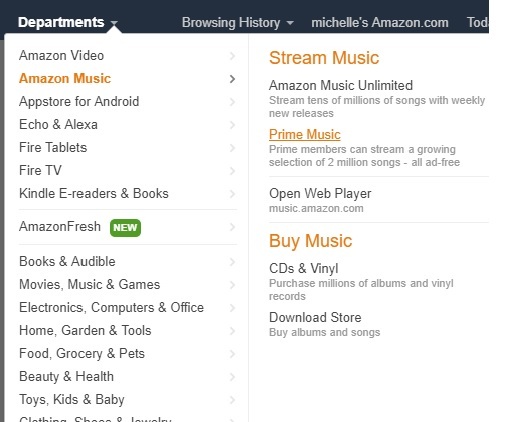

1. Make Sure Your Site Navigation Is Search Engine Friendly

Using Flash for navigation on your website can be bad news if you aren’t aware of how to make Flash objects accessible and web-crawler-friendly. Search engines have a really tough time crawling a website that uses Flash.

CSS and unobtrusive JavaScript can provide almost any of the fancy effects you are looking for without sacrificing your search engine rankings.

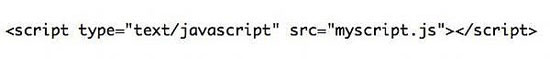

2. Place Scripts Outside of the HTML Document

When you are coding your website, make sure you externalize JavaScript and CSS.

Search engines view a website through what’s contained in the HTML document. JavaScript and CSS, if not externalized, can add several additional lines of code in your HTML documents that, in most cases, will be ahead of the actual content and might make crawling them slower. Search engines like to get to the content of a website as quickly as possible.
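
One rough way to see how much inline code sits in a page is to count the characters inside `<style>` blocks and `<script>` blocks that have no `src` attribute. The sketch below uses only Python's standard library. The class name `InlineCodeFinder` and the helper `measure_inline_code` are made up for illustration, and a character count is only a proxy for the overhead described above.

```python
from html.parser import HTMLParser


class InlineCodeFinder(HTMLParser):
    """Counts characters of inline CSS and JavaScript in an HTML document."""

    def __init__(self):
        super().__init__()
        self.inline_chars = {"script": 0, "style": 0}
        self._current = None  # tag we are currently inside, if it is inline code

    def handle_starttag(self, tag, attrs):
        # A <script> with a src attribute is already externalized, so skip it.
        if tag == "style" or (tag == "script" and "src" not in dict(attrs)):
            self._current = tag

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current:
            self.inline_chars[self._current] += len(data)


def measure_inline_code(html):
    """Return a dict with the number of inline script and style characters."""
    finder = InlineCodeFinder()
    finder.feed(html)
    finder.close()
    return finder.inline_chars


page = '<style>p{color:red}</style><script src="app.js"></script><p>Hi</p>'
print(measure_inline_code(page))
```

Large numbers here suggest code that could move into external `.css` and `.js` files.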

3. Use Content That Search Engine Spiders Can Read

Content is the life force of a website, and it is what the search engines feed on. When designing a website, make sure you take into account good structure for content (headings, paragraphs, and links).

Sites with very little content tend to struggle in the search results and, in most cases, this can be avoided if there is proper planning in the design stages. For example, don’t use images for text unless you use a CSS background image text replacement technique.

4. Design Your URLs for Search Friendliness

Search-friendly URLs are easy to crawl; URLs made up of long query strings are not. The best URLs contain keywords that help describe the content of the page. Be careful with CMSs that use automatically generated numbers and special codes for page URLs. Good content management systems will give you the ability to customize and “prettify” your website’s URLs.
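
As a minimal sketch of what “prettifying” can mean, the function below turns a page title into a keyword-based URL slug. The name `slugify` and the exact rules (lowercase, ASCII only, hyphens between words) are assumptions for illustration; a real CMS may apply different rules.

```python
import re
import unicodedata


def slugify(title):
    """Turn a page title into a lowercase, hyphen-separated URL slug."""
    # Split accented characters into base letter + accent, then drop non-ASCII.
    ascii_text = (
        unicodedata.normalize("NFKD", title)
        .encode("ascii", "ignore")
        .decode("ascii")
    )
    # Replace every run of characters that are not a-z or 0-9 with one hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_text.lower())
    # Remove hyphens left over at either end.
    return slug.strip("-")


print(slugify("10 SEO Tips for Web Designers"))
# prints: 10-seo-tips-for-web-designers
```

A URL ending in `/10-seo-tips-for-web-designers` tells both readers and crawlers more about the page than one ending in `?p=123`.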

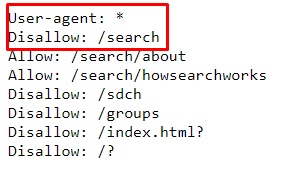

5. Block Pages You Don’t Want Search Engines to Index

There could be pages on your site that you don’t want search engines to index. These could be pages that add no value to your content, such as server-side scripts. They could even be pages you are using to test your designs as you build the new website (which is not advised, yet many of us still do it).

Don’t expose these web pages to web robots. You could run into duplicate content issues with search engines as well as dilute your real content’s density, and these things could have a negative effect on your website’s search positions.

The best way to prevent certain web pages from being indexed by search engine spiders is to use a robots.txt file, one of the five web files that will improve your website.
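
Before deploying a robots.txt file, it can help to check that its rules block what you expect. The sketch below feeds a sample file to Python's standard `urllib.robotparser` module. The `/staging/` and `/cgi-bin/` paths and the helper name `is_crawlable` are assumptions made up for this example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content: block a test area and a scripts folder.
ROBOTS_TXT = """\
User-agent: *
Disallow: /staging/
Disallow: /cgi-bin/
"""


def is_crawlable(path, robots_txt=ROBOTS_TXT, user_agent="*"):
    """Return True if the given robots.txt rules let user_agent fetch path."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, path)


for path in ("/about/", "/staging/new-design.html", "/cgi-bin/form.pl"):
    print(path, "crawlable" if is_crawlable(path) else "blocked")
```

Keep in mind that robots.txt is a request that well-behaved crawlers honor, not an access control.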

If you have a section of your website that is being used as a testing environment, make it password-protected or, better yet, use a local web development environment such as XAMPP or WampServer.

6. Don’t Neglect Image Alt Attributes

Make sure that all of your image alt attributes are descriptive. All images need alt attributes to be 100% W3C-compliant, but many designers comply with this requirement by adding just any text. No alt attribute is better than an inaccurate one.

Search engines will read alt attributes and may take them into consideration when determining the relevancy of the page to the keywords a searcher queries. They are probably also used for ranking by image-based search engines like Google Images.

Outside of the SEO angle, image alt attributes help users who cannot see images.
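
A quick audit can list the images that still need attention. This is a minimal sketch using Python's standard `html.parser`; the names `AltAuditor` and `find_images_without_alt` are made up for illustration. It only finds missing or blank alt text, so judging whether existing text is accurate remains a manual job.

```python
from html.parser import HTMLParser


class AltAuditor(HTMLParser):
    """Collects the src of every <img> whose alt is missing or blank."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")
        # alt is None when the attribute is absent or has no value.
        if alt is None or not alt.strip():
            self.missing.append(attributes.get("src", "(no src)"))


def find_images_without_alt(html):
    """Return the src values of images that lack usable alt text."""
    auditor = AltAuditor()
    auditor.feed(html)
    auditor.close()
    return auditor.missing


page = '<img src="logo.png" alt="Company logo"><img src="chart.png">'
print(find_images_without_alt(page))
```

An empty alt can be legitimate for purely decorative images, so treat the result as a review list rather than a list of errors.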

7. Update Pages with Fresh Content

If your website has a blog, you may want to consider making room for some excerpts of the latest posts to be placed on all of your web pages. Search engines love to see content of web pages changing from time to time as it indicates that the site is still alive and well.

Changing content also brings more frequent crawling by search engines.

You won’t want to show full posts because this could cause duplicate content issues.

8. Use Unique Meta Data

Page titles, descriptions, and keywords should be different on every page. Many times, web designers will create a template for a website and forget to change the meta data, so several pages end up using the original placeholder information.

Every page should have its own set of meta data; it is just one of the things that helps search engines get a better grasp of how the website is structured.
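
Leftover placeholder meta data is easy to catch with a script. The sketch below compares titles and meta descriptions across pages using Python's standard library. The names `MetaExtractor` and `find_duplicate_meta`, and the idea of passing pages as a dict of URL to HTML, are assumptions for illustration.

```python
from collections import defaultdict
from html.parser import HTMLParser


class MetaExtractor(HTMLParser):
    """Pulls the <title> text and meta description out of one page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attributes.get("name") or "").lower() == "description":
            self.description = (attributes.get("content") or "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def find_duplicate_meta(pages):
    """Map each repeated (field, value) pair to the URLs that share it."""
    seen = defaultdict(list)
    for url, html in pages.items():
        extractor = MetaExtractor()
        extractor.feed(html)
        extractor.close()
        fields = (("title", extractor.title.strip()),
                  ("description", extractor.description))
        for field, value in fields:
            if value:  # ignore pages where the field is empty or absent
                seen[(field, value)].append(url)
    return {key: urls for key, urls in seen.items() if len(urls) > 1}


pages = {
    "/": '<title>Home</title><meta name="description" content="Welcome">',
    "/about": '<title>Home</title><meta name="description" content="About us">',
}
print(find_duplicate_meta(pages))
```

Here both pages share the title "Home", so the pair `("title", "Home")` is reported with both URLs.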

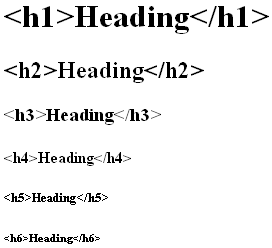

9. Use Heading Tags Properly

Make good use of heading tags in your web page content; they give search engines information on the structure of the HTML document, and search engines often place higher value on these tags than on other text on the web page (except perhaps hyperlinks).

Use the `<h1>` tag for the main topic of the page. Make good use of `<h2>` through `<h6>` tags to indicate content hierarchy and to delineate blocks of similar content.

We don’t recommend using multiple `<h1>` tags on a single page so that your key topic is not diluted.
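
The heading advice above can be checked automatically. This is a minimal sketch with Python's standard `html.parser`; the names `HeadingOutline` and `check_headings` are made up for illustration. The check for skipped levels (for example an h1 followed directly by an h3) is an extra assumption about what a clean hierarchy looks like, not a rule stated in this article.

```python
import re
from html.parser import HTMLParser


class HeadingOutline(HTMLParser):
    """Records the level (1-6) of every heading tag in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if re.fullmatch(r"h[1-6]", tag):
            self.levels.append(int(tag[1]))


def check_headings(html):
    """Return a list of human-readable problems with the heading structure."""
    outline = HeadingOutline()
    outline.feed(html)
    outline.close()

    problems = []
    h1_count = outline.levels.count(1)
    if h1_count != 1:
        problems.append(f"expected exactly one <h1>, found {h1_count}")
    # Assumption: going down more than one level at a time skips hierarchy.
    for previous, current in zip(outline.levels, outline.levels[1:]):
        if current > previous + 1:
            problems.append(f"<h{previous}> is followed by <h{current}>, skipping a level")
    return problems


print(check_headings("<h1>Guide</h1><h2>Setup</h2><h3>Install</h3>"))
# prints: []
```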

10. Follow W3C Standards

Search engines love well-formed, clean code (who doesn’t?). Clean code makes the site easier to index, and can be an indicator of how well a website is constructed.

Following W3C standards also almost forces you to write semantic markup, which can only be a good thing for SEO.